Deploying Kubernetes on CentOS 7

| HOSTNAME | IPADDRESS | CPU | Mem | Disk | OS |
| --- | --- | --- | --- | --- | --- |
| master | 10.10.0.180 | 2 | 4G | 100G | centos7 |
| node1 | 10.10.0.181 | 2 | 4G | 100G | centos7 |

Unless a step names a specific node, run it on all nodes.

Environment preparation (run on all nodes)

Hostname resolution

echo "10.10.0.180 master" >>/etc/hosts

echo "10.10.0.181 node1" >>/etc/hosts
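Running the two echo commands a second time appends duplicate lines. A minimal sketch of an idempotent variant follows; the function name add_host_entry is hypothetical, and the target file is a parameter so the helper can be tried on a copy before touching /etc/hosts.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME (hypothetical helper, not part of any tool)
# Appends "IP NAME" to FILE only if no uncommented line already maps NAME.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # Look for NAME as a whole field somewhere after the address column.
    if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        echo "${ip} ${name}" >>"$file"
    fi
}

# Usage on a real node (as root):
#   add_host_entry /etc/hosts 10.10.0.180 master
#   add_host_entry /etc/hosts 10.10.0.181 node1
```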

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

Disable swap

swapoff -a

sed -ri 's@(.*swap.*)@#\1@g' /etc/fstab
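The sed expression above prefixes every line that contains "swap", including lines that are already commented, so each rerun adds another #. A sketch of a narrower variant is shown below. It assumes GNU sed (as shipped on CentOS 7), and the function name comment_swap_entries is hypothetical.

```shell
#!/usr/bin/env bash
# comment_swap_entries FILE (hypothetical helper)
# Comments out active swap entries only. Lines that already start with '#'
# are left alone, so repeated runs do not stack extra '#' characters.
comment_swap_entries() {
    local file=$1
    sed -E -i '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$file"
}

# Usage on a real node (as root), after swapoff -a:
#   comment_swap_entries /etc/fstab
```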

Kernel settings

The net.bridge.bridge-nf-call-* settings below only exist once the br_netfilter module is loaded, so load it first:

modprobe br_netfilter

Create /etc/sysctl.d/k8s.conf with the following content, then apply it:

cat >/etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
sysctl -p /etc/sysctl.d/k8s.conf
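To confirm that the kernel actually picked up these values, the file can be compared against the live settings. The following is a minimal sketch, assuming GNU sed and the standard sysctl -n option; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# list_sysctl_settings FILE (hypothetical helper)
# Prints "key value" for every "key = value" line, skipping comments and blanks.
list_sysctl_settings() {
    sed -E -n 's/^[[:space:]]*([^#;[:space:]=]+)[[:space:]]*=[[:space:]]*(.*[^[:space:]])[[:space:]]*$/\1 \2/p' "$1"
}

# check_sysctl_settings FILE (hypothetical helper)
# Compares each expected value with the live kernel value from `sysctl -n`
# and returns non-zero if any key differs or is missing.
check_sysctl_settings() {
    list_sysctl_settings "$1" | (
        rc=0
        while read -r key want; do
            have=$(sysctl -n "$key" 2>/dev/null)
            if [ "$have" != "$want" ]; then
                echo "MISMATCH $key: want=$want have=${have:-unset}"
                rc=1
            fi
        done
        exit "$rc"
    )
}

# Usage on a real node:
#   check_sysctl_settings /etc/sysctl.d/k8s.conf && echo "sysctl settings applied"
```

A missing net.bridge.* key in the report usually means br_netfilter is not loaded.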

Install IPVS

Install the ipvsadm management tool so the IPVS proxy rules are easy to inspect:

yum install ipset ipvsadm -y

Load the IPVS modules

cat >/etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules

bash /etc/sysconfig/modules/ipvs.modules

lsmod |grep -e ip_vs -e nf_conntrack_ipv4

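The script above creates /etc/sysconfig/modules/ipvs.modules so that the required modules are loaded automatically after a node reboot. Use lsmod | grep -e ip_vs -e nf_conntrack_ipv4 to confirm that the kernel modules are loaded.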
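The br_netfilter module loaded earlier with modprobe is not covered by that script and will not survive a reboot on its own. One alternative, sketched here under the assumption that systemd-modules-load is in use (it reads /etc/modules-load.d/*.conf, one module name per line), is to list all modules in a single file. The function name write_modules_load_conf and the file name k8s.conf are hypothetical.

```shell
#!/usr/bin/env bash
# write_modules_load_conf NAME DIR MODULE... (hypothetical helper)
# Writes DIR/NAME.conf with one module name per line, the format that
# systemd-modules-load reads at boot.
write_modules_load_conf() {
    local name=$1 dir=$2
    shift 2
    mkdir -p "$dir"
    printf '%s\n' "$@" >"$dir/$name.conf"
}

# Usage on a real node (as root):
#   write_modules_load_conf k8s /etc/modules-load.d \
#       br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4
```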

Clock synchronization

yum install chrony -y

systemctl enable chronyd --now

chronyc sources

Install Docker

Configure the Docker yum repository

yum remove docker* -y && yum install -y yum-utils

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install the docker-ce package

yum install docker-ce -y

Start Docker and enable it at boot

systemctl start docker && systemctl enable docker

Install the cluster tools kubelet, kubectl, and kubeadm

Point the Kubernetes yum repository at the Aliyun mirror

cat >/etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

kubeadm: the command that bootstraps the cluster

kubelet: runs on every node in the cluster and starts Pods and containers

kubectl: the command-line tool for talking to the cluster

yum install kubectl-1.24.3 kubeadm-1.24.3 kubelet-1.24.3 -y

Check that the version is correct

kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"24", GitVersion:"v1.24.3", GitCommit:"aef86a93758dc3cb2c658dd9657ab4ad4afc21cb", GitTreeState:"clean", BuildDate:"2022-07-13T14:29:09Z", GoVersion:"go1.18.3", Compiler:"gc", Platform:"linux/amd64"}

Enable kubelet at boot (kubeadm starts the service itself during init and join)

systemctl enable kubelet

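Initialize the cluster

Download the container images (run on all nodes)

1) List the container images the cluster needs. The listing below shows the default k8s.gcr.io names; with --image-repository set, kubeadm reports the same components under the mirror's prefix.

kubeadm config images list --kubernetes-version=v1.24.3 --image-repository registry.aliyuncs.com/google_containers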

k8s.gcr.io/kube-apiserver:v1.24.3

k8s.gcr.io/kube-controller-manager:v1.24.3

k8s.gcr.io/kube-scheduler:v1.24.3

k8s.gcr.io/kube-proxy:v1.24.3

k8s.gcr.io/pause:3.7

k8s.gcr.io/etcd:3.5.3-0

k8s.gcr.io/coredns/coredns:v1.8.6

2) Pull the images

kubeadm config images pull --kubernetes-version=v1.24.3 --image-repository registry.aliyuncs.com/google_containers

Note: Kubernetes 1.24 no longer includes dockershim, so kubeadm talks to containerd through CRI. After installing Docker or containerd, the packaged /etc/containerd/config.toml disables the CRI plugin by default. Comment out the line disabled_plugins = ["cri"] and restart containerd with systemctl restart containerd; otherwise kubeadm fails with an error like this:

output: E0614 09:35:20.617482 19686 remote_image.go:218] "PullImage from image service failed" err="rpc error: code = Unimplemented desc = unknown service runtime.v1alpha2.ImageService" image="registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.0"

time="2022-06-14T09:35:20+08:00" level=fatal msg="pulling image: rpc error: code = Unimplemented desc = unknown service runtime.v1alpha2.ImageService"
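The config.toml change described in the note can be scripted. This is a minimal sketch, assuming GNU sed and a config file whose disabled_plugins line lists "cri" as in the note; the function name enable_containerd_cri is hypothetical.

```shell
#!/usr/bin/env bash
# enable_containerd_cri FILE (hypothetical helper)
# Comments out an active disabled_plugins line that lists "cri".
# A line that is already commented no longer matches, so reruns are harmless.
enable_containerd_cri() {
    sed -E -i 's/^([[:space:]]*disabled_plugins[[:space:]]*=.*"cri".*)$/#\1/' "$1"
}

# Usage on a real node (as root):
#   enable_containerd_cri /etc/containerd/config.toml
#   systemctl restart containerd
```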

Initialize the master node (master)

kubeadm init --apiserver-advertise-address=10.10.0.180 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.24.3 --service-cidr=10.96.0.0/16 --pod-network-cidr=192.168.0.0/16

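--apiserver-advertise-address: the IP address the API server advertises. This flag only accepts an IP address; to expose the control plane under a domain name, use --control-plane-endpoint.

--image-repository: the image registry to pull from (must match the registry used when pulling the images earlier)

--kubernetes-version: the Kubernetes version to install

--service-cidr: the address range for Services (the internal load-balancing range)

--pod-network-cidr: the address range for Pods (the network plugin installed later allocates IPs from this range)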
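The same settings can be kept in a kubeadm configuration file instead of on the command line, which is easier to review and store in version control. The sketch below writes a kubeadm.k8s.io/v1beta3 configuration with the values used by the flags above; the function name write_kubeadm_config and the file name kubeadm-config.yaml are hypothetical.

```shell
#!/usr/bin/env bash
# write_kubeadm_config FILE (hypothetical helper)
# Writes a kubeadm v1beta3 configuration carrying the same values as the
# kubeadm init flags used in this guide.
write_kubeadm_config() {
    cat >"$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.10.0.180
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.24.3
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/16
  podSubnet: 192.168.0.0/16
EOF
}

# Usage on the master (as root):
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml
```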

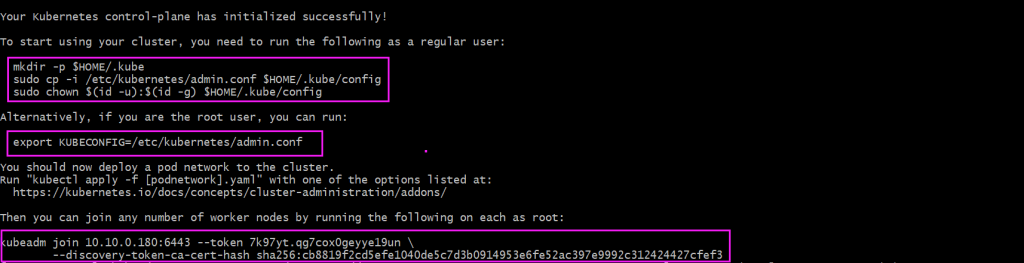

After initialization succeeds, set up kubectl access for the current user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, when working as root, point KUBECONFIG at the admin config directly:

export KUBECONFIG=/etc/kubernetes/admin.conf

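Join node1 to the cluster (node1). Use the kubeadm join command printed at the end of kubeadm init; the token and hash below come from this deployment and will differ on yours.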

kubeadm join 10.10.0.180:6443 --token 7k97yt.qg7cox0geyye19un --discovery-token-ca-cert-hash sha256:cb8819f2cd5efe1040de5c7d3b0914953e6fe52ac397e9992c312424427cfef3
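Bootstrap tokens expire after 24 hours by default, so the join command from the init output may no longer work when a node is added later. kubeadm token create --print-join-command prints a fresh one. The hash part can also be recomputed from the CA certificate; the sketch below follows the openssl pipeline from the kubeadm documentation, assumes an RSA CA key, and uses a hypothetical function name ca_cert_hash.

```shell
#!/usr/bin/env bash
# ca_cert_hash CERT (hypothetical helper)
# Prints the SHA-256 digest of the certificate's DER-encoded public key,
# the value that follows "sha256:" in --discovery-token-ca-cert-hash.
# Assumes an RSA CA key.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Usage on the master (as root):
#   kubeadm token create --print-join-command    # prints a complete join command
#   ca_cert_hash /etc/kubernetes/pki/ca.crt      # prints the hash only
```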

Check node status (master). Nodes report NotReady until a Pod network plugin is installed.

kubectl get nodes
NAME     STATUS     ROLES           AGE   VERSION
master   NotReady   control-plane   10m   v1.24.3
node1    NotReady   <none>          83s   v1.24.3

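Install the flannel network plugin (master)

Pods in the cluster can only communicate with each other once a Pod network is installed. Kubernetes supports several network solutions; choose one from the Kubernetes Addons list.

Download the manifest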

wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

Change the address range the plugin allocates so that it matches --pod-network-cidr

sed -i 's#10.244.0.0/16#192.168.0.0/16#g' kube-flannel.yml
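If the upstream manifest ever ships a different default range, the sed substitution silently changes nothing. A quick check of the resulting value catches that before the manifest is applied. This sketch assumes the manifest keeps its "Network" key inside net-conf.json, as the flannel manifest does; the function name flannel_network is hypothetical.

```shell
#!/usr/bin/env bash
# flannel_network FILE (hypothetical helper)
# Prints the value of the "Network" key from the manifest's net-conf.json.
flannel_network() {
    sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Usage after the sed edit:
#   [ "$(flannel_network kube-flannel.yml)" = "192.168.0.0/16" ] && echo "CIDR matches"
```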

Apply the manifest

kubectl apply -f kube-flannel.yml

Check node status

[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES           AGE   VERSION
master   Ready    control-plane   20m   v1.24.3
node1    Ready    <none>          11m   v1.24.3
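Nodes can take a minute or two to turn Ready after the manifest is applied. For scripted checks, the STATUS column can be filtered as in this minimal sketch; the function name not_ready_nodes is hypothetical, and it reads the node list on stdin so it can be tried against saved output.

```shell
#!/usr/bin/env bash
# not_ready_nodes (hypothetical helper)
# Reads `kubectl get nodes --no-headers` output on stdin and prints the
# name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Usage on the master:
#   kubectl get nodes --no-headers | not_ready_nodes
# kubectl can also block until every node is Ready:
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```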

Shell completion for kubectl

yum install bash-completion -y

echo "source <(kubectl completion bash)" >>~/.bashrc